Zero-Shot Neural Machine Translation System For Low-Resource Languages

A comprehensive overview of zero-shot neural machine translation systems for low-resource languages.

Introduction

In recent years, neural machine translation (NMT) has made significant strides, particularly in bridging language barriers for high-resource languages. However, low-resource languages often face challenges in NMT due to limited parallel corpora for training. To address this issue, zero-shot NMT systems have emerged as a promising approach. These systems enable translation between language pairs without explicit parallel data, offering a potential solution for low-resource languages.

Zero-Shot NMT Framework

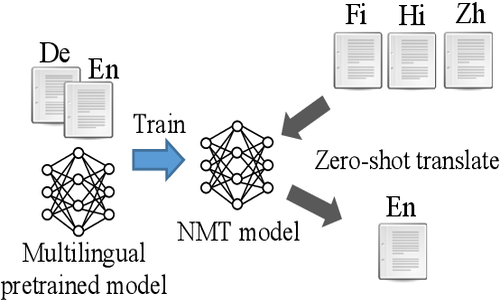

Zero-shot NMT relies on multilingual models trained on diverse language pairs, allowing them to generalize across languages. The core idea is to leverage shared representations across languages to facilitate translation even in the absence of direct training data for a particular language pair. By learning to map sentences from multiple languages into a shared embedding space, zero-shot models can infer translations between language pairs not encountered during training.

Training Strategy

Training a zero-shot NMT model involves pretraining on a large multilingual corpus, followed by fine-tuning on language-specific data. During pretraining, the model learns to encode and decode text from various languages, capturing universal linguistic features. Fine-tuning then adapts the model to the target language through additional training on available parallel data, enhancing translation quality for low-resource languages.

Challenges and Solutions

Zero-shot NMT faces several challenges, including data sparsity, domain adaptation, and language divergence. Limited parallel data for low-resource languages can hinder translation quality, requiring innovative techniques such as data augmentation, transfer learning, and unsupervised pretraining. Domain adaptation techniques help the model generalize to specific domains within low-resource languages, while addressing language divergence involves mitigating differences in syntax, vocabulary, and linguistic structure.

Evaluation and Performance

Evaluation of zero-shot NMT systems typically involves assessing translation quality using metrics such as BLEU, METEOR, and TER. Performance varies across language pairs and resource levels, with translation quality often lower for low-resource languages compared to high-resource counterparts. Nonetheless, zero-shot NMT systems demonstrate promising results, showcasing their potential for expanding access to translation services for underrepresented languages.

Future Directions

Future research in zero-shot NMT for low-resource languages should focus on improving translation quality, scalability, and resource efficiency. Techniques such as semi-supervised learning, active learning, and zero-resource translation hold promise for enhancing model performance with limited data. Additionally, efforts to build larger and more diverse multilingual corpora can further empower zero-shot NMT systems to bridge language barriers effectively.

Conclusion

Zero-shot NMT systems offer a promising solution for facilitating translation between low-resource languages, leveraging shared representations and multilingual training to overcome data scarcity. While challenges remain, ongoing research and innovation hold the potential to significantly improve translation quality and accessibility for diverse linguistic communities, promoting inclusivity and communication across language boundaries..